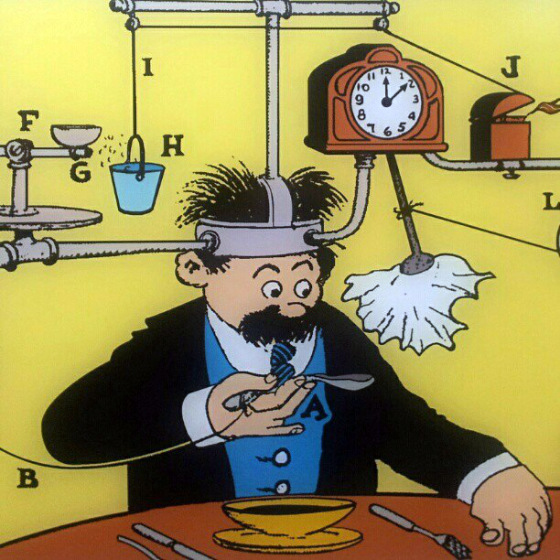

Rationalism’s promise, that rationality works uniformly and universally, runs into difficulties when it encounters nebulosity. It tries ignoring the trouble, but nebulosity won’t go away. Then rationalism adds more machinery to the contraption.

Rationality aims mainly at finding true beliefs. Part One of The Eggplant catalogs a series of rationalist theories of truth and belief. Each fails in an apparently different way—although nebulosity is the underlying cause in each case. As it becomes apparent that each theory is inadequate, rationalism bolts on additional complications in an attempt to handle the problem, producing another, more complex theory.

The five sections of this chapter explain this general evolutionary pattern of rationalist theories, and how Part One addresses it.

- Meta-rationalism, the view of The Eggplant, is not a philosophical theory; it’s practical, not theoretical.

- Rationalism is a philosophical theory, so we have to discuss it partly in philosophical terms.

- Rationalism, encountering nebulosity, attempts to reinterpret it as linguistic vagueness, or as uncertainty. That doesn’t work because nebulosity is distinct from the other two issues.

- Rather than accepting nebulosity as the underlying cause, which needs to be treated at the root, rationalism tries to work around particular symptoms by adding formal machinery. In each case the machinery doesn’t do even that, and instead creates counter-productive complexity.

- Part One roughly recapitulates the history of rationalism from the late 1800s (when formal logic was invented) to the 1960s (when rationalism conclusively failed). However, Part One’s structure follows the intrinsic pattern of successive inevitable problems and their attempted solutions, rather than a historical narrative.

Eventually, rationalism became an unwieldy mess of ad hoc machinery that still couldn’t explain key observations. One may simply hope someone can somehow make it work someday. However, analysis of the anomalies, followed by fundamental re-thinking, leads naturally to the quite different, meta-rational alternative.

Rationalism is philosophy; The Eggplant isn’t

Part One may sound like philosophy at first. Reading it like that might result in missing its point.

Rationalism is a philosophical theory. It’s difficult to point out problems with a philosophical position without sounding like philosophy. Also, only philosophers have treated many of the topics of this Part. So, unfortunately, it’s impossible to avoid using some philosophical jargon terms—explained in the next section.

However, The Eggplant is not philosophical. The book is not about clever arguments, or seeking the ultimate truth of some matter. Its sources and goals are practical, not theoretical. The aim is mundane: more effective ways of thinking and acting in technical work.

Rather than attempting to conclusively refute rationalism,1 Part One shows how it encounters predictable patterns of practical problems when it collides with nebulosity. What do these difficulties imply for our use of rationality? Specific ways rationalism fails point to specific features of the meta-rational alternative (explained in Part Three). You may come to find that more useful and more plausible.

The problems I point out are well-known and widely discussed. I review them here only because I couldn’t find a discussion elsewhere which covers the issues with minimal philosophical and historical baggage, and at the right level of detail. For readers interested in exploring further, Part One frequently footnotes The Stanford Encyclopedia of Philosophy. Peter Godfrey-Smith’s Theory and Reality is a good introduction to the philosophy of science, and covers several of the issues.

My presentation of these well-known difficulties is unusual in pointing out how each stems from the same root: failure to take nebulosity into account. This pattern recurs because rationalism’s goal is to prove that rationality is guaranteed correct or optimal, and nebulosity makes that impossible.

After explaining each problem, Part One summarizes an alternative, meta-rational approach, which works with nebulosity effectively. These brief discussions foreshadow more detailed explanations in later Parts, which will sound much less like philosophy. Parts Two and Three are based on detailed observations of how people actually do things, and sound more like anthropology. Parts Four and Five are pragmatic guides to how to use rationality; they sound more like engineering, or research management.

The problems rationalism treats as theoretical and philosophical, for which it wants to find uniform, universal, formal solutions, meta-rationality treats instead as practical hassles. Hassles can’t be “solved,” but they can be managed reasonably effectively by devising social practices and by engineering physical objects.

Unavoidable philosophy jargon

“Epistemology” and “ontology.” If you find these terms from philosophy off-putting, you are in good company. They are ugly, and it’s hard to remember which is which.

However, rationalism describes itself as a theory of epistemology, so we can’t do without that one. And ignoring ontology is its fundamental failing, so we’re stuck with that too.

An epistemology is an explanation of knowing. For academic philosophy, key epistemological questions are: “What is knowledge? What is a belief? How can we get true beliefs and eliminate false ones?” In this book, we’ll be more interested in everyday epistemological questions like “should I take the grocer’s word for it that Thai eggplants can substitute for regular ones?” and scientific questions like “how do we know whether zinc prevents colds?” But ontology is more important than epistemology for The Eggplant.

An ontology is an explanation of what there is.2 Key ontological questions for philosophy are: “What fundamental categories of things are there? What properties and relationships do they have?” For The Eggplant, ontology is more about “do Thai eggplants count as eggplants at all” and “does this new respiratory virus count as a ‘cold’ virus?”

Answers to ontological questions mostly can’t be true or false. Categories are more or less useful depending on purposes. Borges’ fictional encyclopedia3 categorizes animals as:

- those that belong to the Emperor,

- embalmed ones,

- those that are trained,

- suckling pigs,

- mermaids,

- fabulous ones,

- stray dogs,

- those included in the present classification,

- those that tremble as if they were mad,

- innumerable ones,

- those drawn with a very fine camelhair brush,

- others,

- those that have just broken a flower vase,

- those that from a long way off look like flies.

This is a bad ontology, for any imaginable purpose, but it is not false.

Ontology is intrinsically, irreparably nebulous. Whether or not an object belongs to a category often does not have a definite, true or false answer. For example:

- It may be gradual: When does a rotting eggplant cease to be an eggplant and become something else? That’s a “gray area.”

- It may be purpose-dependent: Whether or not there is water in the refrigerator depends on why you care. If you are looking for something to drink, an eggplant probably won’t do—but if you are in a bio lab and the small amount of water vapor continually emitted by an eggplant would ruin the sample you were thinking of storing in the refrigerator, a warning that there is water in the refrigerator could be important.

“Nebulous” does not mean subjective, arbitrary, or merely in your head. An eggplant is not a good way to slake thirst; and that is not a matter of opinion.

Ontologies can’t be true, but some are effective for their purposes. Organizing tasks in your to-do list software into projects and categories may improve your life.

Ontological questions depend on context and purpose. Contexts and purposes are endlessly variable. I’ll often use the term unenumerable to point to the practical impossibility of taking into account all factors that could potentially bear on specific situations. Useful truths must have practical implications for concrete problems. Universal, absolute truths cannot take into account unenumerable factors—and so mostly cannot be useful.

Meta-rational epistemology takes this nebulosity of truth, knowledge, and belief into account.

Vagueness, uncertainty, and nebulosity

Let’s consider three obstacles to rationality, and how rationalism addresses them:4

- Representational vagueness: inability to fix quite what a sentence, formula, or model means, and so how it relates to reality.

- Epistemological uncertainty: both “known unknowns,” where insufficient evidence leaves you unable to tell whether a statement is true or false, and “unknown unknowns,” relevant factors whose existence you are unaware of.

- Ontological nebulosity: the fizzy, fuzzy, fluid indefiniteness of the world itself.

These all have a similar flavor—they could all be covered by a word like “fuzziness.” They all make it difficult to say that particular statements are definitely true or false. Ultimately, they are inseparable, and they are often confused in practice. However, the conceptual distinctions are helpful.

Rationalism mainly concerns itself with the first two obstacles. Those are about human cognition, so it may seem that rationality could overcome them. We can sharpen our language and gather more data, and then maybe eventually, or at least in principle, rationalism could deliver on its guarantee. Nebulosity is about the world, so it can’t be fixed, and rationalism tries to ignore it.

Encountering representational vagueness, rationalism considers ordinary language defective, and tries to replace it with alternative, more precise systems. For instance, formal logic was designed partly as an antidote to linguistic ambiguity.

Sometimes sharper representations are valuable. The move to formalism is much of what gives rational methods their power.

Fully eliminating vagueness turns out to be infeasible, however. And, the attempt is based on a fundamental misunderstanding of what ordinary language is for, and how it works. Ordinary language contains extensive resources for working with nebulosity. These get lost when you replace it with technical abstractions. Part Two, on reasonableness, explains some of them.

For meta-rationalism, ordinary language is not defective; it is well-suited to its actual purpose. Meta-rationality coordinates ordinary language’s nebulosity-clarifying methods with rational methods of formal notation.

Encountering uncertainty, rationalism asks “on what basis can we know whether this is true?” It assumes that well-formed statements are either absolutely true or absolutely false, that the problem of knowledge is to find out which, and that rationality is the solution. Of course, some things are true, and rational methods for determining truth can be highly effective in some situations.

Unfortunately, uncertainty can never be entirely eliminated, and formal reasoning methods don’t cope with it well. Probabilistic rationality handles some cases, but not all. “Unknown unknowns”—relevant factors you have not considered at all—can’t be incorporated into any formal system. Formal treatment of an uncertain fact requires specifying it in advance.
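The last point can be sketched with a toy Bayesian update in Python. This is only an illustration under stated assumptions: the hypothesis names, priors, and likelihoods are invented, not drawn from any real data. Whatever the evidence, the update can only redistribute probability among hypotheses that were listed in advance; a factor nobody thought to list never receives any.

```python
def bayes_update(prior, likelihood):
    """Return the posterior over the hypotheses named in `prior`.

    prior: dict mapping hypothesis name -> prior probability
    likelihood: dict mapping hypothesis name -> P(evidence | hypothesis)
    """
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


# Hypothetical setup: why did the cold symptoms clear up quickly?
prior = {"zinc_helped": 0.5, "would_have_recovered_anyway": 0.5}
# Assumed likelihoods of a quick recovery under each listed hypothesis.
likelihood = {"zinc_helped": 0.8, "would_have_recovered_anyway": 0.4}

posterior = bayes_update(prior, likelihood)

# The posterior covers exactly the hypotheses specified in advance.
# An unlisted factor has no entry at all, so no evidence can ever favor it.
unlisted = "it_was_an_allergy"
print(posterior)
print(unlisted in posterior)
```

Adding the unlisted factor to `prior` would fix this one case, but only after someone has already noticed it, which is the step the formalism itself cannot supply.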

As we’ll see in Part Three, we can work effectively with unknown unknowns, but not in a formally rational framework.

Encountering ontological nebulosity, rationalism typically misinterprets it as either representational vagueness or epistemological uncertainty. However, nebulosity is not a matter of linguistic sloppiness or of ignorance, but of there existing no definite, absolutely true answers to most questions—however they are stated.

Nebulosity negates any possibility of strong claims for rationality. Most rationalisms could work only if beliefs were either absolutely true or absolutely false. That means they need to deny or ignore nebulosity. If you start exploring ontological questions, nebulosity becomes obvious, so rationalisms generally work hard to ignore them all, considering epistemology only.

But completely separating epistemology and ontology is impossible. Most beliefs are about things that are inherently nebulous. Then whether a belief is true or false is also nebulous, to some degree. Most facts about clouds and eggplants are not absolutely true, only true-enough for some purpose. Mostly the best we can get are “pretty much true” truths. No amount of additional information would resolve these into absolute ones. Part One is largely about why that is, and what it implies.

Rationalism often bases explicit denials of nebulosity on fundamental physics. There are two steps in the typical argument. The first step: subatomic particles have absolutely definite properties, described by quantum field theory, which is absolute truth. (Let us grant this claim for the sake of the argument.5) The second step: everything is made out of particles, so everything is also absolutely definite. Thus, the world is well-behaved, and there is an absolute truth to everything, even if we currently don’t know it. Quantum physics gives the correct ontology; that’s a solved problem.

The second step doesn’t follow (as we’ll see later). Quantum physics doesn’t have much to say about what I’ll call “the eggplant-sized world.” That extends from roughly the size of bacteria up—although size as such is not the issue. It is that the objects, categories, properties, and relationships we care about are not, in practice, understandable in quantum terms. Yet we can and do apply rationality effectively at the eggplant scale; this needs an alternative explanation (in Part Three).

Part One repeatedly asks: “What sort of world would rationalism be true of?” What would it take to make a guarantee about rationality that could stick? Broadly, the answer is: a world without nebulosity; a world in which all objects, categories, properties, and relationships were perfectly definite.

Adding machinery

The rationalisms I discuss each take their criterion of rationality from some particular formal system. They promise correctness or optimality based on the reasons the formalism is correct or optimal in its own terms. For instance, if you start from absolutely true beliefs, logic allows you to find other absolutely true beliefs. This fact about logic is true, absolutely.

Each rationalism fails when its idealized concepts of truth and belief collide with some nebulous aspect of the world. A proof that a formal system works correctly internally is irrelevant to the question of how it relates to the concrete external world. Rationalisms direct your attention away from that, because none has a worked-out explanation of how formalism engages with clouds, eggplants, or jam. Meta-rationalism does explain that, and it emphasizes the value of paying attention to the interface.

Each rationalist theory failed for practical, technical reasons. From the outside, one can see that nebulosity was the underlying cause in each case; but viewed from inside, each trouble spot looked quite different, and it seemed reasonable that an additional technical device could fix it. So, encountering difficulty, rather than saying “this whole project seems not to be working, we need to step back and come up with Plan B,” rationalists plowed ahead, creating more complex variants of their system. Each rationalism added more conceptual machinery of its favorite type, rather than asking if that type of machinery was suitable for the job.6

- Whenever it became clear that standard mathematical logic could not handle a particular problem, logicists bolted on more logic-stuff that was supposed to address it. When you point out that the extension doesn’t actually come to grips with the problem, and that it’s incompatible with all the other extensions, logicists say “well, yes, but something like this has to work.”

- When you point out that probabilistic inference always depends on debatable, somewhat arbitrary choices in setting up a problem description, probabilists suggest that you could try all possibilities in parallel.7 When you point out that this is impossible, they say “well, yes, but it’s the correct approach in principle.”
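The second bullet’s point about setup choices can be made concrete with a toy calculation. This is a minimal sketch, not anyone’s actual analysis: the data and both priors are invented for illustration. It uses the standard Beta-Binomial result that a Beta(a, b) prior, updated on some number of successes in a number of trials, has posterior mean (a + successes) / (a + b + trials).

```python
from fractions import Fraction


def posterior_mean(a, b, successes, trials):
    """Posterior mean of a success rate under a Beta(a, b) prior.

    Standard Beta-Binomial result: the posterior is
    Beta(a + successes, b + trials - successes).
    """
    return Fraction(a + successes, a + b + trials)


# Hypothetical data: 3 of 4 zinc-takers recovered quickly.
successes, trials = 3, 4

# Two defensible-looking but different setups (both are assumptions).
uniform_prior = (1, 1)     # "no opinion" about the recovery rate
skeptical_prior = (1, 9)   # expects quick recovery to be rare

mean_uniform = posterior_mean(*uniform_prior, successes, trials)
mean_skeptical = posterior_mean(*skeptical_prior, successes, trials)

print(mean_uniform)    # 2/3
print(mean_skeptical)  # 2/7
```

The same evidence yields quite different conclusions, and nothing inside the calculation says which prior was the right one to start from.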

Rationalisms have some response to every objection. Critics point out that the responses don’t work. Rationalists respond in turn; such disputes often go many layers deep. To make Part One finite, we’ll only take the analysis a couple of steps in any direction.

Eventually one just has to say “This contraption has gotten awfully complicated, and mostly doesn’t seem to work in practice. Perhaps you will be able to fix it someday with even more machinery, but that seems increasingly unlikely. And we do have a better alternative!”

This is not history

Nowadays, rationalism operates only as a metaphysical belief system: an unfounded certainty, based on imaginary understanding, that rationality must somehow always work.8

Historically, though, rationalism was a serious and credible intellectual project: to justify rationality by applying it to itself. The goal was a well-defined, detailed, rational explanation of what rationality is and why it works. Initially, there was no obvious reason this should not have succeeded.

Part One presents a series of rationalist theories that roughly recapitulate the development of rationalist thought. However, the goal here is not historical detail or accuracy, but understanding the intrinsic reasons that made each added complication necessary—as the failure modes of successive models came into view.9

Beginning with the Ancient Greeks, rationalists strengthened and elaborated the theory that rationality is the correct or optimal way of thinking and acting. They developed arguments that, for instance, Reason should be the Monarch that rules the Passions. Although increasingly sophisticated, these now seem naive and confused. By the mid-1700s rationalist theories were starting to turn up troubling anomalies.

It was only when armed with mathematical logic, developed in the late 1800s, that rationalists could tackle seriously the questions “so what is rationality exactly?” and “what proof do we have that it always works?” It was a major shock to discover, starting in the 1930s, that we can’t say exactly what it is, and it doesn’t always work. Tested seriously, rationalism fails not for one reason, but for dozens, any one of which would be fatal. The intellectual powertools logicians developed to prove rationalism correct instead proved the opposite.

- 1. Rationalism, taken seriously, requires operations that do not seem possible in practice. As philosophy, rationalism suggests that they must nevertheless be possible in principle. I believe they are mainly not possible even in principle, and sometimes I will sketch reasons. These partial theoretical explanations provide intuitions, rather than a knock-down philosophical proof. Philosophical proofs occasionally change individual philosophers’ minds, but never seem to defeat philosophical positions. Ways of thinking eventually go out of fashion, but not because someone shows they are definitively wrong. Showing a better alternative is more effective. Even then, philosophy progresses one funeral at a time; no position is so silly that diehards will cease defending it.

- 2. The term “ontology” is itself highly nebulous, with no generally accepted definition, and different philosophers use it differently. See the Stanford Encyclopedia of Philosophy article “Logic and Ontology.”

- 3. The Celestial Emporium of Benevolent Knowledge is an imaginary encyclopedia quoted by Jorge Luis Borges in “The Analytical Language of John Wilkins.” The non-fictional Wilkins proposed a formal, rational ontology in 1668. Borges’ essay suggests that this is impossible.

- 4. There are more obstacles to rationality than these three. For example, computational complexity theory puts practical limits on reasoning; this book doesn’t discuss that.

- 5. There are two grounds for doubt: quantum indeterminacy, and physicists’ certainty that the existing quantum field theory is not correct. Nothing in The Eggplant relies on these doubts, however, so it simplifies the discussion to ignore them.

- 6. Philip E. Agre’s Computation and Human Experience, pages 38-48, discusses this pattern in depth. “Ideas are made into techniques, these techniques are applied to problems, practitioners try to make sense of the resulting patterns of promise and trouble, and revised ideas result. Inasmuch as the practitioners’ perceptions are mediated by their original worldview, and given the near immunity of such worldviews from the pressures of practical experience, the result is likely to be a series of steadily more elaborate version of a basic theme. The general principle behind this process will become clear only once the practitioners’ worldview comes into question, that is, when it becomes possible to see this worldview as admitting alternatives. Until then, the whole cycle will make perfect sense in its own terms, except for an inchoate sense that certain general classes of difficulties never seem to go away.”

- 7. Solomonoff induction is a popular version of this ruse.

- 8. In the language of Meaningness, rationalism is an eternalism.

- 9. Historians may be offended by my impressionistic narrative, objecting that I’ve omitted important developments, lumped together people who had significant disagreements, and simplified subtle philosophical theories to the point of inaccuracy.